Software Applications

2022 / VR Application

Company:

Cortiical Limited

Role:

Unity Developer

Date:

Mid 2022-2023

Project Details

This project developed an immersive, highly effective Virtual Reality (VR) training program that teaches trainees to operate the Komatsu GS350, a heavy-duty construction and mining vehicle, safely and efficiently. The program provides a realistic, risk-free environment in which trainees learn and practice operating this complex machinery, improving safety and operator proficiency while reducing training costs.

The motivation for this project was that traditional training can be expensive, because it requires actual equipment and specialized trainers. The VR program significantly reduced training costs by minimizing the use of real equipment and its associated resources. Trainees can practice tasks such as the machine start-up and shutdown sequence, excavation, and maintenance in a realistic VR environment. Their performance is then assessed and sent over the internet to update the customer's workforce management systems directly. A major benefit of developing the program for the Quest 2 is that, thanks to the headset's standalone functionality, it delivers accurate simulation and instant assessment of trainees without bulky extra equipment.

Company:

Frame Labs

Role:

Lead Developer

Date:

Early 2024-2025

Project Details

This project involved helping to complete the Wolf Creek VR Demo, a VR horror game based on the classic Australian horror film Wolf Creek. My work on the demo for Frame Labs covered hand and object interaction, audio playback systems and scene management systems, as well as other smaller tasks to bring the project to a high professional standard.

I also worked on several in-development projects and served as lead developer on prototypes for two upcoming Frame Labs games, Rytmos MR and The Lady Tradie.

Highlights

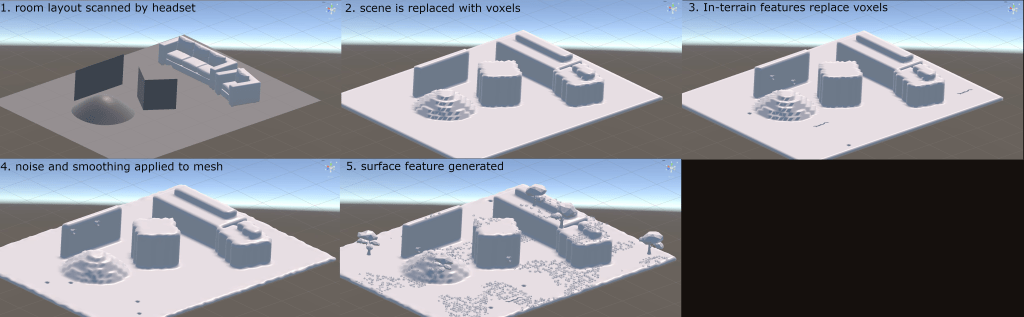

Voxelization and Meshing:

As part of ongoing development for potential future projects, I was tasked with taking an existing mixed reality system that scans the play space and converts it into voxels (the 3D equivalent of a pixel: an equal division of 3D space), and using this data to generate a digital game scene. Part of this task was creating an algorithm that detects voxel faces with no neighbouring voxel, so that only those exposed faces are drawn as mesh. Further work then went into mesh subdivision optimization techniques. Through a series of combination and division steps, the mesh can be given fewer faces in less important areas and more faces where higher detail is needed, giving good performance without a noticeable drop in quality.

The second part of this process was turning the barren landscape mesh into terrain with distinct features. Using generative methods and several further voxel-processing algorithms, I created a system that generates formations of varying voxel sizes and finds random locations with enough space to place them on the existing landscape. Because pre-specified shapes are placed at random, the terrain generator creates a varied landscape on every new run, regardless of whether the scanned room is the same or different.
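The exposed-face step can be illustrated with a minimal Python sketch. It assumes the scanned space is stored as a set of occupied unit-size integer grid cells; `exposed_faces` and `face_quad` are hypothetical names, and this shows the general face-culling technique rather than the project's own Unity code. A face is kept only when the cell on the other side of it is empty, so faces buried between two voxels are never meshed.

```python
from typing import Iterable, List, Set, Tuple

Cell = Tuple[int, int, int]

# One offset per cube face: +x, -x, +y, -y, +z, -z.
FACE_DIRECTIONS: Tuple[Cell, ...] = (
    (1, 0, 0), (-1, 0, 0),
    (0, 1, 0), (0, -1, 0),
    (0, 0, 1), (0, 0, -1),
)


def exposed_faces(voxels: Iterable[Cell]) -> List[Tuple[Cell, Cell]]:
    """Return (voxel, direction) for every face whose neighbouring cell is empty."""
    occupied: Set[Cell] = set(voxels)
    faces = []
    for x, y, z in sorted(occupied):
        for dx, dy, dz in FACE_DIRECTIONS:
            if (x + dx, y + dy, z + dz) not in occupied:
                faces.append(((x, y, z), (dx, dy, dz)))
    return faces


def face_quad(voxel: Cell, direction: Cell) -> List[Cell]:
    """Corner positions of one face of the unit cube spanning voxel..voxel+1.

    Winding order is not corrected here, so a renderer would still need to
    orient each quad so that its normal points outward.
    """
    axis = next(i for i, d in enumerate(direction) if d != 0)
    u, v = [i for i in range(3) if i != axis]  # the two axes spanning the face
    offset = 1 if direction[axis] > 0 else 0   # positive faces sit on the far side
    corners = []
    for du, dv in ((0, 0), (1, 0), (1, 1), (0, 1)):
        p = list(voxel)
        p[axis] += offset
        p[u] += du
        p[v] += dv
        corners.append((p[0], p[1], p[2]))
    return corners
```

For two voxels side by side, the two touching faces are culled, leaving 10 of the original 12 faces to turn into quads.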

Hand Gesture System:

As part of in-house development, I had the chance to work on a finger/hand tracking system for use in future projects. In VR hand tracking, the headset scans your hands and renders them on screen as virtual hands. The system reads these hands and can save their current pose as a hand shape; if the hands take that shape again, the gesture detection system recognizes it. The system also allowed gestures to be performed in pairs or alternating pairs rather than one at a time. For example, when holding a bow you might make a fist with one hand and pinch the bowstring with the other.

My role was to optimise the code base to improve tracking performance. First, I added hand chirality (left or right) to gesture detection, so each hand is only compared against gestures for that hand, halving the number of required operations. The next optimisation was in the processing model: the original system updated every frame, which had a knock-on effect because every downstream system then had to read this data every frame. I replaced this with an action/delegate system that triggers only when the gesture changes. The last optimisation was pose/action linking, in which the list of gestures to check is limited by the hand's current state. Links define which gestures or states can pass to which other gestures, so gestures can be chained and the detector only looks for gestures along a certain path instead of checking against the entire gesture library, vastly improving performance.
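The three optimisations can be sketched together in Python. This is a minimal illustration under assumed names: `Gesture`, `GestureDetector`, the finger-curl pose encoding and the `tolerance` threshold are all hypothetical, not the original system's API. Each detector serves a single hand (chirality), only checks gestures linked from the current one, and notifies listeners only when the recognized gesture changes, instead of publishing a result every frame.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Sequence, Set


@dataclass
class Gesture:
    name: str
    chirality: str                 # "left" or "right"
    finger_curls: Sequence[float]  # hypothetical pose encoding: 0 = straight, 1 = fully curled


@dataclass
class GestureDetector:
    chirality: str                     # the single hand this detector tracks
    library: Dict[str, Gesture]        # every known gesture, for both hands
    transitions: Dict[str, Set[str]]   # gesture name -> gestures allowed to follow it
    tolerance: float = 0.2             # hypothetical per-finger matching threshold
    current: str = "idle"
    listeners: List[Callable[[str, str], None]] = field(default_factory=list)

    def candidates(self) -> List[Gesture]:
        """Gestures linked from the current state that belong to this hand."""
        allowed = sorted(self.transitions.get(self.current, set()))
        return [
            self.library[name]
            for name in allowed
            if name in self.library and self.library[name].chirality == self.chirality
        ]

    def update(self, curls: Sequence[float]) -> None:
        """Match the tracked pose; notify listeners only if the gesture changed."""
        match: Optional[str] = None
        for gesture in self.candidates():
            if all(abs(a - b) <= self.tolerance for a, b in zip(curls, gesture.finger_curls)):
                match = gesture.name
                break
        if match is not None and match != self.current:
            previous, self.current = self.current, match
            for listener in self.listeners:
                listener(previous, match)
```

Gestures that are not linked from the current state, or that belong to the other hand, are never compared, and downstream systems only run when a listener fires.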

Finger Grabbing:

Whilst working on the hand gestures, it became clear that a fully realized hands-only XR experience would also need systems that let the hands themselves interact with the environment.

This system uses two-point anchoring: fingertip collision in unison with thumb/palm collision determines whether an object should be grabbed. This method supports a number of common grip shapes at low processing cost. Fingertip collision, coupled with a fully realized physical hand, allows many realistic interactions with the scene: you can push, pull, grab, poke, use your finger as a stylus, and interact in just about any way you would in real life. This interaction system is likely to see use in many future projects, in different forms, as fully AR systems like the Apple Vision Pro become more popular.
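A minimal Python sketch of the two-point anchoring rule, assuming the physics engine already reports which objects each fingertip, the thumb and the palm are touching; `HandContacts`, `graspable_objects` and `Grabber` are hypothetical names, not the project's code. An object counts as graspable only while a fingertip and an opposing anchor (thumb or palm) touch it at the same time, so a single-point touch such as a poke or a push never triggers a grab.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set


@dataclass
class HandContacts:
    """Objects currently touched by each part of one hand (ids reported by physics)."""
    fingertips: Dict[str, Set[str]] = field(default_factory=dict)  # non-thumb finger -> object ids
    thumb: Set[str] = field(default_factory=set)
    palm: Set[str] = field(default_factory=set)


def graspable_objects(contacts: HandContacts) -> Set[str]:
    """Two-point anchoring: a fingertip AND the thumb or palm must touch the object."""
    fingertip_hits = set().union(*contacts.fingertips.values())
    opposing_hits = contacts.thumb | contacts.palm
    return fingertip_hits & opposing_hits


class Grabber:
    """Holds at most one object and releases it once either anchor loses contact."""

    def __init__(self) -> None:
        self.held: Optional[str] = None

    def update(self, contacts: HandContacts) -> Optional[str]:
        graspable = graspable_objects(contacts)
        if self.held is not None and self.held not in graspable:
            self.held = None            # an anchor point broke contact: release
        if self.held is None and graspable:
            self.held = min(graspable)  # deterministic pick if several objects qualify
        return self.held
```

A pinch (index fingertip plus thumb) and a full grip (fingertips plus palm) both pass the same check, which is why one cheap set intersection can cover several common grip shapes.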